mygrad.nnet.activations.sigmoid

- mygrad.nnet.activations.sigmoid(x: ArrayLike, *, constant: Optional[bool] = None) → Tensor

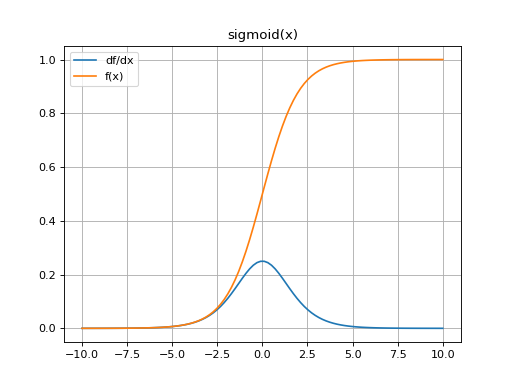

Applies the sigmoid activation function:

f(x) = 1 / (1 + exp(-x))
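As a rough illustration of the formula above, the following NumPy-only sketch evaluates the same expression element-wise. The helper name `sigmoid_reference` is hypothetical and is not part of MyGrad; it does no gradient tracking and only shows the forward computation.

```python
import numpy as np


def sigmoid_reference(x):
    """Evaluate f(x) = 1 / (1 + exp(-x)) element-wise (hypothetical helper, not MyGrad API)."""
    x = np.asarray(x, dtype=float)
    return 1.0 / (1.0 + np.exp(-x))


# Same inputs as the documented example: 10 evenly spaced points on [-5, 5]
x = np.linspace(-5, 5, 10)
out = sigmoid_reference(x)
```

Because f(-x) = 1 - f(x), the outputs for these symmetric inputs pair up to sum to 1, which is a handy sanity check on any implementation.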

- Parameters

- x : ArrayLike

sigmoid is applied element-wise on x.

- constant : Optional[bool]

If True, the returned tensor is a constant (it does not back-propagate a gradient).

- Returns

- mygrad.Tensor

Examples

    >>> import mygrad as mg
    >>> from mygrad.nnet import sigmoid
    >>> x = mg.linspace(-5, 5, 10)
    >>> sigmoid(x)
    Tensor([0.00669285, 0.02005754, 0.0585369 , 0.1588691 , 0.36457644,
            0.63542356, 0.8411309 , 0.9414631 , 0.97994246, 0.99330715])
